Organizations cause their own problems, according to Sidney Dekker, author of Drift into Failure: From Hunting Broken Parts to Understanding Complex Systems. Dekker writes, “Failure is not so much about breakdowns or malfunctioning of components, as it is about an organization not adapting effectively to cope with the complexity of its structure and environment.”

In his book, Dekker provides example after example of organizations that drifted into failure, and explains why. Although none of Dekker’s examples relate directly to IT, that did not prevent Lance Hayden, managing director of the Berkeley Research Group and author of People-Centric Security: Transforming Your Enterprise Security Culture, from using Dekker’s theory to argue that organizations in the IT industry are just as prone to drifting into failure, in particular security failure.

Why do strategies fail?

In People-Centric Security, Hayden asks the question, “Why does one organization’s security strategy seem to protect the organization, while another’s efforts fail miserably? We seem to know everything about how information security works, except how it actually works.”

Hinting at a reason, Hayden quotes management expert Peter Drucker: “Culture eats strategy for breakfast.” Hayden then adds, “Too often, security programs searching for the reason they failed in their technology or their strategy are simply looking in the wrong places for answers.”

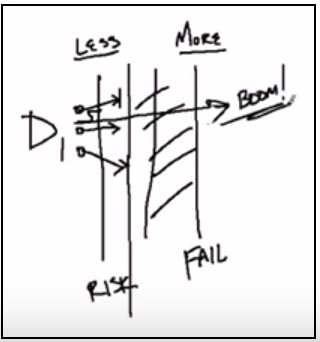

To explain what he means by wrong places, Hayden developed a concept he calls Security Drift: “A metaphor as to why security often seems to fail repeatedly, despite our best efforts.” In a YouTube video, Hayden explains Security Drift using the diagram above and the following descriptions:

- Design (D1): A system (a piece of tech or an organization) designed to accomplish some outcome.

- Failure of Security (Fail): The failure line (analogous to the edge of a cliff) is some unknown point in an organization’s event horizon.

- Risk Tolerance (Risk): Because security failure is hard to predict, the Risk line (analogous to a fence) creates a buffer zone that serves as a hedge against it.

- Do More with Less (More, Less): Hayden points out that companies constantly make choices (trade-offs) between security and other priorities, including profitability, productivity, and usability.

The “Boom” arrow in the diagram shows what happens when the buffer zone is not enough to prevent a security breach. Now let’s look at how Security Drift’s concepts might apply in a real-world scenario:

Data center example

Bob is building a new app that requires access to systems in the commercial data center Bob’s company uses. While testing the app, Bob notices some connectivity problems and calls the data center (D1) for help. Allan, the data center’s firewall administrator, informs Bob that additional connectivity through the data center requires a formal network security review of the app (Risk).

Bob, realizing that this review is going to blow his app’s launch date and most likely his bonus, appeals to his manager, pointing out that security reviews have already been completed and the app’s code is solid. Bob’s manager forces the issue with the data center’s CIO, and an exception is granted (Less). Allan is told to open a range of ports through the firewall for the app (first horizontal arrow). When the app goes live, everything seems to work fine, and nothing blows up. Bob is vindicated, and Allan breathes a sigh of relief and soon forgets about Bob’s app (More).

A few months later, Bob has a similar request. Allan, annoyed that Bob is yet again circumventing the process (Risk), is nonetheless overruled. Bob drives revenue (More), and Allan granted an exception once (Less) and everything was fine. So the list of exceptions grows, the number of open ports increases, and the data center’s attack surface widens (additional horizontal arrows that move the Risk line closer to the Fail line).

Eventually, an attacker uses one of the open ports to subvert a different application in the data center and exfiltrates sensitive client information (Boom). The breach becomes public, and an investigation traces the incident back to dozens of exceptions made by Allan.

Allan takes most of the heat and barely manages to keep his job. A new network security system is put in place, this one designed to require higher-level approvals so that the earlier mistakes are not repeated. Everyone moves forward.

A year later, Allan works for a different company. Bob is now a director. The security breach of the previous year is nearly forgotten, and the company is looking forward to launching its new, completely redesigned app in weeks.

One day, Bob’s lead developer calls him in a near panic. The launch may have to be delayed, the developer explains: “The people at the data center are insisting their procedures require them to do a full network security review before they open up the ports we need. That’s going to take weeks.”

“This is a multimillion-dollar launch,” Bob replies, looking for his VP’s phone number. “I think we can get an exception this one time….”

In hindsight

Hayden uses similar scenarios in his talks. “When I give a presentation, I often see heads nodding in agreement in the audience,” he says. “The drift analogy obviously resonates with people I talk to, who see within it their own situations and struggles.”

Hayden adds that drift explains more than just information security’s struggle to maintain an effective protective posture. “Security is not unique; it is just another complex system,” he says. “And this type of slow movement towards failure, brought about by competing imperatives that prove incompatible with an organization’s strategies and goals, can be seen in any complex system.”

More or less: as one goal is optimized, the risk of failure increases for another, and security seems to get the short end of the stick more often than not.